How to build a simple Hidden Markov Models

How to build a Simple Hidden Markov Models with Pomegranate

In this article, we will be using the Pomegranate library to build a simple Hidden Markov Model

The HHM will be based on an example from the book Artificial Intelligence: A Modern Approach:

You are the security guard stationed at a secret under-ground installation. Each day, you try to guess whether it’s raining today, but your only access to the outside world occurs each morning when you see the director coming in with, or without, an umbrella.

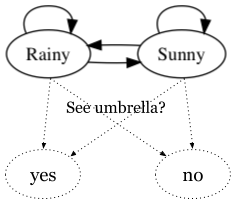

A simplified diagram of the required network topology is shown below.

Describing the Network

\lambda = (A, B) specifies a Hidden Markov Model in terms of an emission probability distribution A and a state transition probability distribution B.

HMM networks are parameterized by two distributions: the emission probabilities giving the conditional probability of observing evidence values for each hidden state, and the transition probabilities giving the conditional probability of moving between states during the sequence. Additionally, you can specify an initial distribution describing the probability of a sequence starting in each state.

At each time t, Xt represents the hidden state, and Yt represents an observation at that time.

In this problem, t corresponds to each day of the week and the hidden state represent the weather outside (whether it is Rainy or Sunny) and observations record whether the security guard sees the director carrying an umbrella or not.

For example, during some particular week the guard may observe an umbrella ['yes', 'no', 'yes', 'no', 'yes'] on Monday-Friday, while the weather outside is ['Rainy', 'Sunny', 'Sunny', 'Sunny', 'Rainy']. In that case, t =Wednesday, tWednesday=yes, and XWednesday=Sunny. (It might be surprising that the guard would observe an umbrella on a sunny day, but it is possible under this type of model.)

Initializing an HMM Network with Pomegranate

The Pomegranate library supports two initialization methods. You can either explicitly provide the three distributions, or you can build the network line-by-line. We'll use the line-by-line method for the example network, but you're free to use either method for the part of speech tagger.

# create the HMM model

model = HiddenMarkovModel(name="Example Model")

Implementation - Add the Hidden States

When the HMM model is specified line-by-line, the object starts as an empty container. The first step is to name each state and attach an emission distribution.

Observation Emission Probabilities: P(Yt | Xt)

We need to assume that we have some prior knowledge (possibly from a data set) about the director's behavior to estimate the emission probabilities for each hidden state. In real problems you can often estimate the emission probabilities empirically, which is what we'll do for the part of speech tagger. Our imaginary data will produce the conditional probability table below. (Note that the rows sum to 1.0)

| yes | no | |

|---|---|---|

| $Sunny$ | 0.10 | 0.90 |

| $Rainy$ | 0.80 | 0.20 |

# create the HMM model

model = HiddenMarkovModel(name="Example Model")

# emission probability distributions, P(umbrella | weather)

sunny_emissions = DiscreteDistribution({"yes": 0.1, "no": 0.9})

sunny_state = State(sunny_emissions, name="Sunny")

# above & use that distribution to create a state named Rainy

rainy_emissions = DiscreteDistribution({"yes": 0.8, "no": 0.2})

rainy_state = State(rainy_emissions, name="Rainy")

# add the states to the model

model.add_states(sunny_state, rainy_state)

assert rainy_emissions.probability("yes") == 0.8, "The director brings his umbrella with probability 0.8 on rainy days"

print("Looks good so far!")

Adding Transitions

Once the states are added to the model, we can build up the desired topology of individual state transitions.

Initial Probability P(X0):

We will assume that we don't know anything useful about the likelihood of a sequence starting in either state. If the sequences start each week on Monday and end each week on Friday (so each week is a new sequence), then this assumption means that it's equally likely that the weather on a Monday may be Rainy or Sunny. We can assign equal probability to each starting state by setting P(X0=Rainy) = 0.5 and P(X0=Sunny)=0.5:

| Sunny | Rainy |

|---|---|

| 0.5 | 0.5 |

State transition probabilities P(Xt | Xt-1)

Finally, we will assume for this example that we can estimate transition probabilities from something like historical weather data for the area. In real problems you can often use the structure of the problem (like a language grammar) to impose restrictions on the transition probabilities, then re-estimate the parameters with the same training data used to estimate the emission probabilities. Under this assumption, we get the conditional probability table below. (Note that the rows sum to 1.0)

| Sunny | Rainy | |

|---|---|---|

| Sunny | 0.80 | 0.20 |

| Rainy | 0.40 | 0.60 |

# create edges for each possible state transition in the model

# equal probability of a sequence starting on either a rainy or sunny day

model.add_transition(model.start, sunny_state, 0.5)

model.add_transition(model.start, rainy_state, 0.5)

# add sunny day transitions (we already know estimates of these probabilities

# from the problem statement)

model.add_transition(sunny_state, sunny_state, 0.8) # 80% sunny->sunny

model.add_transition(sunny_state, rainy_state, 0.2) # 20% sunny->rainy

# TODO: add rainy day transitions using the probabilities specified in the transition table

model.add_transition(rainy_state, sunny_state, 0.4) # 40% rainy->sunny

model.add_transition(rainy_state, rainy_state, 0.6) # 60% rainy->rainy

# finally, call the .bake() method to finalize the model

model.bake()

assert model.edge_count() == 6, "There should be two edges from model.start, two from Rainy, and two from Sunny"

assert model.node_count() == 4, "The states should include model.start, model.end, Rainy, and Sunny"

print("Great! You've finished the model.")

Checking the Model

The states of the model can be accessed using array syntax on the HMM.states attribute, and the transition matrix can be accessed by calling HMM.dense_transition_matrix(). Element $(i, j)$ encodes the probability of transitioning from state $i$ to state $j$. For example, with the default column order specified, element $(2, 1)$ gives the probability of transitioning from "Rainy" to "Sunny", which we specified as 0.4.

Run the next cell to inspect the full state transition matrix, then read the .

column_order = ["Example Model-start", "Sunny", "Rainy", "Example Model-end"] # Override the Pomegranate default order

column_names = [s.name for s in model.states]

order_index = [column_names.index(c) for c in column_order]

# re-order the rows/columns to match the specified column order

transitions = model.dense_transition_matrix()[:, order_index][order_index, :]

print("The state transition matrix, P(Xt|Xt-1):")

print(transitions)

print("\nThe transition probability from Rainy to Sunny is {:.0f}%".format(100 * transitions[2, 1]))

Inference in Hidden Markov Models

In this section, we'll use this simple network to quickly go over the Pomegranate API to perform the three most common HMM tasks:

- Calculate Sequence Likelihood

- Decoding the Most Likely Hidden State Sequence

- Forward likelihood vs Viterbi likelihood

Implementation - Calculate Sequence Likelihood

Calculating the likelihood of an observation sequence from an HMM network is performed with the forward algorithm. Pomegranate provides the the HMM.forward() method to calculate the full matrix showing the likelihood of aligning each observation to each state in the HMM, and the HMM.log_probability() method to calculate the cumulative likelihood over all possible hidden state paths that the specified model generated the observation sequence.

# input a sequence of 'yes'/'no' values in the list below for testing

observations = ['yes', 'no', 'yes']

assert len(observations) > 0, "You need to choose a sequence of 'yes'/'no' observations to test"

# use model.forward() to calculate the forward matrix of the observed sequence,

# and then use np.exp() to convert from log-likelihood to likelihood

forward_matrix = np.exp(model.forward(observations))

# use model.log_probability() to calculate the all-paths likelihood of the

# observed sequence and then use np.exp() to convert log-likelihood to likelihood

probability_percentage = np.exp(model.log_probability(observations))

# Display the forward probabilities

print(" " + "".join(s.name.center(len(s.name)+6) for s in model.states))

for i in range(len(observations) + 1):

print(" <start> " if i==0 else observations[i - 1].center(9), end="")

print("".join("{:.0f}%".format(100 * forward_matrix[i, j]).center(len(s.name) + 6)

for j, s in enumerate(model.states)))

print("\nThe likelihood over all possible paths " + \

"of this model producing the sequence {} is {:.2f}%\n\n"

.format(observations, 100 * probability_percentage))

Output

Rainy Sunny Example Model-start Example Model-end

<start> 0% 0% 100% 0%

yes 40% 5% 0% 0%

no 5% 18% 0% 0%

yes 5% 2% 0% 0%

The likelihood over all possible paths of this model producing the sequence ['yes', 'no', 'yes'] is 6.92%

Implementation - Decoding the Most Likely Hidden State Sequence

The Viterbi algorithm calculates the single path with the highest likelihood to produce a specific observation sequence. Pomegranate provides the HMM.viterbi() method to calculate both the hidden state sequence and the corresponding likelihood of the viterbi path.

This is called "decoding" because we use the observation sequence to decode the corresponding hidden state sequence. In the part of speech tagging problem, the hidden states map to parts of speech and the observations map to sentences. Given a sentence, Viterbi decoding finds the most likely sequence of part of speech tags corresponding to the sentence.

We're going to use the same sample observation sequence as above, and then use the model.viterbi() method to calculate the likelihood and most likely state sequence. Let's compare the Viterbi likelihood against the forward algorithm likelihood for the observation sequence.

# input a sequence of 'yes'/'no' values in the list below for testing

observations = ['yes', 'no', 'yes']

# use model.viterbi to find the sequence likelihood & the most likely path

viterbi_likelihood, viterbi_path = model.viterbi(observations)

print("The most likely weather sequence to have generated these observations is {} at {:.2f}%."

.format([s[1].name for s in viterbi_path[1:]], np.exp(viterbi_likelihood)*100)

)

Output

The most likely weather sequence to have generated these observations is ['Rainy', 'Sunny', 'Rainy'] at 2.30%.

Forward likelihood vs Viterbi likelihood

The code below shows the likelihood of each sequence of observations with length 3, and compare with the viterbi path.

from itertools import product

observations = ['no', 'no', 'yes']

p = {'Sunny': {'Sunny': np.log(.8), 'Rainy': np.log(.2)}, 'Rainy': {'Sunny': np.log(.4), 'Rainy': np.log(.6)}}

e = {'Sunny': {'yes': np.log(.1), 'no': np.log(.9)}, 'Rainy':{'yes':np.log(.8), 'no':np.log(.2)}}

o = observations

k = []

vprob = np.exp(model.viterbi(o)[0])

print("The likelihood of observing {} if the weather sequence is...".format(o))

for s in product(*[['Sunny', 'Rainy']]*3):

k.append(np.exp(np.log(.5)+e[s[0]][o[0]] + p[s[0]][s[1]] + e[s[1]][o[1]] + p[s[1]][s[2]] + e[s[2]][o[2]]))

print("\t{} is {:.2f}% {}".format(s, 100 * k[-1], " <-- Viterbi path" if k[-1] == vprob else ""))

print("\nThe total likelihood of observing {} over all possible paths is {:.2f}%".format(o, 100*sum(k)))

Output

The likelihood of observing ['no', 'no', 'yes'] if the weather sequence is...

('Sunny', 'Sunny', 'Sunny') is 2.59%

('Sunny', 'Sunny', 'Rainy') is 5.18% <-- Viterbi path

('Sunny', 'Rainy', 'Sunny') is 0.07%

('Sunny', 'Rainy', 'Rainy') is 0.86%

('Rainy', 'Sunny', 'Sunny') is 0.29%

('Rainy', 'Sunny', 'Rainy') is 0.58%

('Rainy', 'Rainy', 'Sunny') is 0.05%

('Rainy', 'Rainy', 'Rainy') is 0.58%

The total likelihood of observing ['no', 'no', 'yes'] over all possible paths is 10.20%

Full code on Github. Click here!

This notebook is part of the NLP program provided by Udacity.

Happy coding!